Chapter 7 Searching and Filtering

One of the most common and important uses of computer programs is to search a large set of data for a specific record or observation—indeed, a significant portion of what computers do is search through data. This chapter covers a number of common patterns and algorithms used when searching through lists of data in order to answer questions about that data. Note that this chapter introduces no new syntax, but instead provides a deeper look at using loops and lists. The first two sections illustrates common interactions with lists, while the last considers at a high level the efficiency of algorithms.

7.1 Linear Search

Fundamentally, search algorithms are used to “find” a particular item in list: given a very large list of elements, the goal of the search is to determine whether the list contains the “target” item, and if so where in the list that element is. Thus basic search algorithms are used to answer the questions is an item in a list? or which element in the list is the item?.

Python does contain built-in operators and list methods (e.g., in, index(), max() etc.) that can answer simple versions of these questions. However, more complex programs may require you to create your own “custom” searches following the patterns described here.

The most basic algorithm you can use to answer these questions is called a linear search. Intuitively, this search takes all of the elements in a list, and then goes down the “line” of elements one after another, checking if each element in turn is the target item. (Think: “Are you who I’m looking for?” “No” “Are you who I’m looking for?” “No”, “Are you who I’m looking for?” “Yes!”). If you get through the entire line of items without finding the target (without anyone answering “yes”), then you know that the target is not in the list (because you checked everyone)!

This kind of search involves just a simple for loop (to consider every element) and if statement (to check if the item is the target):

def linear_in(a_list, target):

"""Searches the given list for the given target value.

Returns whether or not the target is in the list"""

for element in a_list: # go through each element

if element == target: # check that element

return True # if found, report so!

return False # looked at everyone but didn't find, report back

# Example:

numbers = [17, 18, 3, 7, 11, 16, 13, 4] # the list to search

print( linear_in(numbers, 11) ) # True, 11 in list

print( linear_in(numbers, 12) ) # False, 12 not in listPay careful attention to the position of the return statements! First, you return True if (when) the target is found—this “exits” the function so you do not keep searching once you’ve found your target. The second return statement occurs after the loop has entirely finished. You need to check every item in the list before you can conclusively say that the item isn’t there (otherwise you may have just missed it).

A common error is include an else clause that returns or stores that the item was not found. However, returning False makes the statement “none of the elements in the list is the target” which is dependent on the entire list: looking at a single item won’t let you make that claim! Contrast this with returning True, which makes the statement “one of the elements in the list is the target”, which can be proved by considering just one item (e.g., the one).

While a useful organizing tool, a linear search does not need to be implemented as its own function if you instead use a variable to track whether the item is found or not:

is_found = False # has not been found when we start looking

for element in a_list: # go through each element

if element == target: # check that element

is_found = True # mark as found!To determine which element in the list is the target, you use the same structure but consider the index of each element, returning that index when the item is found. By convention, if the target is not in the list, you can return -1 (which will be an out-of-bounds index for any list):

def linear_search(a_list, target):

"""Searches the given list for the given target value.

Returns the index of the target, or -1 if target not in the list"""

for index in range(len(a_list)): # go through each element

if a_list[index] == target: # check that element

return index # if found, report the index

return -1 # looked at everyone but didn't find, report back

# Example:

numbers = [17, 18, 3, 7, 11, 16, 13, 4] # the list to search

print( linear_search(numbers, 11) ) # 4

print( linear_search(numbers, 12) ) # -1

print( linear_search(numbers, 11) >= 0 ) # True, 11 in list

print( linear_search(numbers, 12) >= 0 ) # False, 12 not in list7.1.1 Maximal Search

Another common item to search for is the “biggest” (or “smallest”) element in the list. This may be the biggest number, the longest word, the slowest turtle, the highest-scoring sports game… any element that has a “greater” ordinal value (e.g., it comes “first” in some ordered listing, whether that ordering is ascending or descending). Whenever you are searching for the “-est” item in a list, you can use the same variant on a linear search.

I refer to this variant as a “king-of-the-hill” search, named after the children’s game. In this algorithm, start by declaring an initial element (often the first in the list) as the “king”—the “greatest” value in the list. The algorithm then goes down the “line” of elements, having each one in turn “challenge” the king. If the challenging value is greater, then that value becomes the new king, and the process continues. Whichever item is the king at the end must be the “greatest” item that was being searched for.

This algorithm is implemented with a similar structure to the basic linear search, except instead of comparing to the target, you compare to the current “king”:

def maximum(a_list):

"""Returns the element with the maximum value in the list."""

maximum = a_list[0] # first person starts as the "king"

for element in a_list: # go through each element

if element > maximum: # challenge the king

maximum = element # if won, become the new king

return maximum # in the end, return who is left standing

# Example:

numbers = [17, 18, 3, 7, 11, 16, 13, 4] # the list to search

print( maximum(numbers) ) # 18The most common error with this algorithm is not comparing to the previous maximum, instead trying to compare to e.g, the previous element in the list.

Note that it is also possible to do this same search by using the built-in max() function and specifying an ordering function as an argument, which converts any element into a value with the proper ordering. Using functions as arguments is discussed in a later chapter.

To reiterate, this function can be used to find any extreme value simply by changing the “challenge” comparison. For example use < instead of > to find the “minimum” item, or use a more complex boolean expression to compare dictionary “rows” in a data table.

7.1.2 Falsification Search

Many searches are interested in determining if all elements in a list meet a certain criteria. For example, determining if all the numbers are greater than 10, if all of the words are less than 3 syllables, if all of the turtles are running at speed, or if the home team won all of their games.

But loops are only able to consider one element at a time, not “all” the elements at once. So in order to answer these questions, you need to invert the question: saying “all numbers are greater than 10” is logically equivalent to saying “no number is less than (or equal to) 10”.

(Somewhat counter-intuitively, the logical negation of an “all are” predicate is not “none are” but “one is not”). That is, the opposite of “all days are sunny” is not “no days are sunny” but “(at least) one day was not sunny”.

By inverting the question into a search for a counter-example, you can utilize the previous linear search pattern:

def all_larger(a_list, minimum):

"""Returns whether all of the elements in the list are larger than the

given minimum value."""

all_are_large = True # every number we've looked at is large

for element in a_list:

if not (element > minimum): # written as counter-example

# equivalent to `element <= minimum`

all_are_large = False # counterexample found! Statement no longer valid

break # "exits" from the loop, since don't need to search more

return all_are_large # report back

# Example:

numbers = [17, 18, 3, 7, 11, 16, 13, 4] # the list to search

print( all_larger(numbers, 10) ) # False, some numbers are smaller than 10

print( all_larger(numbers, 2) ) # True, all numbers are greater than 2

Careful variable naming (e.g., all_are_large) for keeping track of boolean claims is vital to being able to read and write these algorithms! Just calling the variable e.g., large can make it confusing to understand what your logic is doing.

Overall, a linear search is a simple and versatile algorithm, but you need to be careful to use the right variation based on the question being asked.

7.2 Filtering

In addition to searching data for a specific item, you will often want to search a data set for all items that meet some criteria. For example, you want to find all items numbers that are large, or all sports games that the home team one, or all outliers in a medical diagnoses. This is called filtering for a set of data. Filtering is one of the most common steps used in data analysis; indeed the searching described in the previous section can be seen as a specialized filtering (where you’re filtering for a single element).

Importantly, when we talk about filtering in programming, we think about what items we want to select or keep, rather than what items we want to discard. We don’t try to “filter out the small numbers”, instead we try to “filter (select) for the big numbers”. A filter is about what is let in (a whitelist), not about what is excluded.

The simplest way to filter a data set is to use a loop similar to a linear search, but instead of finding a single element, you construct a new list that contains the elements that “pass” the filter and thus that you want to keep. This follows the basic template:

result_list = [] # initialize new list

for value in original_sequence: # loop through the items

if value meets criteria: # if item "passes" the filter and should be kept

result_list.append(value) # add value to new listAs a concrete example, you can filter a list of numbers for those that are “large” (e.g., greater than 10):

numbers = [17, 18, 3, 7, 11, 16, 13, 4] # the list to search

large_numbers = [] # the list for the items to find

for number in numbers: # loop through the values

if(number > 10): # check if the value

large_numbers.append(number)

print(large_numbers) # [17, 18, 11, 16, 13]There are two important things to notice in this example.

First, filtering involves constructing a new list or sequence. This makes the code easier to write and process (you don’t need to worry about deleting things from the middle of a list), and is best practice in doing data analysis because you won’t modify or “lose” your original data, so that you can perform different filters on it (e.g., you could also filter for which numbers are even).

Second, the if statement’s expression checks if the value meets the desired criteria and should be added to the new list, not whether it should be skipped or removed. Filtering is a “positive” process. The filter criteria (the boolean expression) can be as complex as needed; you can check multiple criteria by using the and or or operators. And of course you can include additional statements or if statements if that helps make your code more understandable:

i_words = []

for word in word_list:

lower_case_word = word.lower() # format for consideration

if(lower_case_word.startswith('i')): # check starting letter

if(not lower_case_word in i_words): # don't keep if already in list

chosen_words.append(word) # keep original wordOf course, to use a more Pythonic approach you can do filtering using a list comprehension:

numbers = [17, 18, 3, 7, 11, 16, 13, 4] # the list to search

large_nubmers = [number for number in numbers if number > 0]

print(large_numbers) # [17, 18, 11, 16, 13]Using an if statement in a list comprehensino performs a filtering operation!

Note that The i_words example cannot be written as a list comprehension, because the filtering work is dependent on the previous results—it is not considered a “pure” operation.

7.3 Mapping

You can’t talk about filtering without also mentioning its cousin, mapping. A mapping operation is one that takes an original list (e.g., of numbers) and produces a new list with each of the original elements transformed in a certain way (e.g., rounded). The operation “maps” each number into a new value in the new list. This is a common operation to apply: maybe you want to “transform” a list so that all the values are rounded or lowercase, or you want to map a list of words to a list of their lengths.

Mapping can be done using a similar looping process to filtering, but you add a transformed value to the new list (instead of the original value if it meets a filtering criteria):

numbers = [1.3, 2.7, 3.14, 4.8, 5.99] # the list to map

rounded_numbers = [] # a new list for the mapped values

for number in numbers: # loop through the original list

transformed = round(number) # map the individual item

rounded_numbers.append(transformed) # add transformed value to new list.

print(rounded_numbers) # [1, 3, 3, 5, 6]You might recognize this example—this kind of process is exactly what a list comprehension does! In fact, a list comprehension is a mapping operation—every comprehension is doing some kind of mapping (and likely some filtering at the same time):

numbers = [1.3, 2.7, 3.14, 4.8, 5.99] # the list to map

rounded_numbers = [round(number) for number in numbers]

print(rounded_numbers) # [1, 3, 3, 5, 6]7.4 Search Efficiency

Searching is something that you do a lot (and on bigger and bigger data sets!), so it’s worth considering: how fast is this process? How efficient is the algorithm? Is there possibly a more efficient way of searching?

Warning: AVOID PRE-MATURE OPTIMIZATION!! While this section and chapter discuss the “speed” of computer programs, you should avoid spending too much (or any!) time trying to make your program “as fast as possible”. The first step in any computer program is to make it function at all. Once you have it working, then you can worry about increasing the efficiency—and only if it is currently too slow for your purposes. Pre-mature optimization (trying to make it work fast before you make it work at all) is a major source of bugs and other problems.

One way of measuring the speed of an algorithm would be to time it, such as by using a stopwatch. Python includes modules that can be used to record the time, allowing you to get the “start” and “stop” time of the algorithm, and then calculate the elapsed duration. However, this wall-clock efficiency is highly dependent on the exact list being searched and on the computer that is executing the algorithm—if you’re also streaming videos while searching, the algorithm may run slower!

Instead, computer scientists measure the efficiency of a program by counting the number of operations that the algorithm does. This number will be independent of the data and machine, and so makes it easier to compare approaches. Specifically, to measure the efficiency of a search, you would consider the number of comparisons that need to be made between elements (e.g., how many elements you check before you find what you’re looking for), under the assumption that this is the most time-consuming part of the computer’s search algorithm.

7.4.1 Linear Search Speed

Linear search involves looking at each item in the list one at a time, so the number of “checks” that need to be made is dependent on the size of the list. And because the item you’re looking for may be either be at the beginning of the list (meaning you don’t search for very long) or at the end, you can consider both the “average” case (when it’s in the middle), as well as the “worst” case (when it’s at the end or not in the list at all!):

| len(list) | # comparisons (avg) | # comparisons (worst) |

|---|---|---|

| 10 | 5 | 10 |

| 20 | 10 | 20 |

| 50 | 25 | 50 |

| 100 | 50 | 100 |

| 1000 | 500 | 1000 |

N |

N/2 |

N |

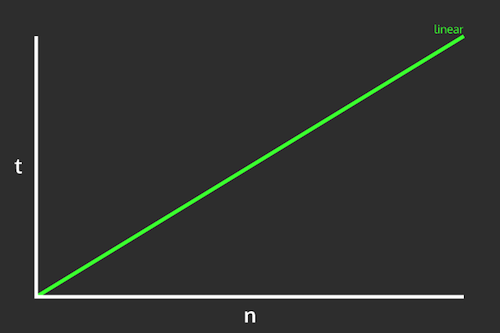

These numbers should be somewhat intuitive: because you’re looking at each element in a line, in the average case you need to look at half of the elements, and in the worst case you need to look at all of them! As such, the number of comparisons you need to make (and thus the efficiency of the algorithm) is a linear function of the size of the list:

Linear time complexity. Image by Nick Salloum.

This is in fact why it is called a linear search!

In general, we measure algorithm efficiency (or more properly, algorithmic complexity) in terms of the rate of change in the speed: that is, if you double the size of the input list, by what ratio does the work you need to do increase? With a linear search, doubling the size of the list will double the amount of work to do.

Note that looking up an element by its index or key is a constant function—it takes the same amount of time no matter how big the list or dictionary is. This is part of why dictionaries are so useful as look-up tables: you don’t need to spend time searching for the value if it you have its key!

7.4.2 Faster Searching: Binary Search

There are alternate, faster algorithms for searching through lists that can be useful as the data set gets large.

As an example, consider how you might search for a name in a phone book (or a word in an encyclopedia): rather than starting from “A” and going one by one though the book, you flip it open to the middle. If the name you’re after comes later in the alphabet than the page you opened to, then you take the “back half” of the book and flip to the middle of that (otherwise, you flip to the middle of the “front half”). You repeat this process, narrowing the number of pages you need to consider more and more until you’ve found the name you are looking for!

What makes this work is the fact that names in the phone book are ordered: after doing a comparison (checking the name against the page), you know whether to go forward or backwards to find the target.

This algorithm is known as a binary search, and it lets you search an ordered list by comparing to the middle, and reducing the list to only the top or bottom half, and repeating:

def binary_search(a_list, target):

"""Searches the given SORTED list for the given target value.

Returns the index of the target, or -1 if target not in the list"""

start_index = 0 # initial goalposts

end_index = len(a_list)-1

while start_index <= end_index: # at least one thing to look at

middle_index = (start_index + end_index) // 2 # middle (integer) index

if(a_list[middle_index] == target):

return middle_index # found the item!

elif target > a_list[middle_index]:

start_index = middle_index+1 # move goalpost

else:

end_index = middle_index-1 # move goalpost

return -1 # did not find the itemThis algorithm starts by considering the entire list (with the “goalposts” at either end. It then looks at the middle element. If that isn’t the target, then it moves the appropriate goalpost to that middle spot, thereby throwing away the half of the list and narrowing the search field. This continues until the target is found or the goalposts have moved “past” one another, at which point the search ends. See this animation for an example of how it works.

(Note that this is an example of indefinite iteration: you don’t know how many times you’ll need to cut the list in half before searching, so you use a while loop!)

So how fast is a binary search? Using a timer will demonstrate that binary search is much faster than linear search (as the list gets large), but how much faster?

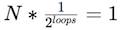

The intuition behind the speed of a binary search is as follows: Each time through the loop (each “comparison”) effectively lets you reduce the size of the list by half. So if there are N items in the list, the first time through the loop reduces the list to N*(1/2) items, the second time through reduces is to N*(1/2)*(1/2), etc. In the worst case, you will need to cut the list in half until you have reduced the list to exactly one item, or:

We can solve this equation for the number of loops:

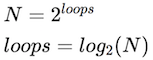

Thus binary search’s speed is a logarithmic function of the size of the list: that is, if you double the size of the list, the number of comparisons you need to do (the number of times through the loop) increases by just 1.

Logarithmic time complexity. Image by Nick Salloum.

This is drastically faster than a linear search…. however, it requires a list be sorted for it to work!

7.4.3 Slower Algorithms: Sorting

There are many, many different algorithms for sorting numbers, many of which have amusing names given to them by computer scientists (Python’s built-in sorted() method uses one called Timsort, named after the man who invented it). This chapter will discuss just one straightforward example for illustration purposes.

One such algorithm (Selection Sort) utilizes the “king-of-the-hill” search described above. This algorithm works as follows: search for (“select”) the smallest item in the list. Because it is the smallest, it must be the first item in the sorted list, and can be placed there. Next, select the second-smallest item in the list (the smallest of the “unsorted” items), and place that second in the “sorted” list. Continue with this process until the entire list is sorted!

def selection_sort(a_list):

"""Sorts the list (in place)"""

for i in range(len(a_list)): # go through each spot in the list

# Do a "king-of-the-hill" search of the remaining items

selected_index = i

for j in range(i, len(a_list)):

if(a_list[j] < a_list[selected_index]):

selected_index = j

# swap smallest into place (multi-assignment!)

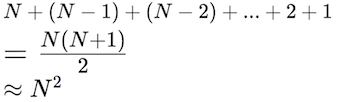

a_list[i], a_list[selected_index] = a_list[selected_index], a_list[i]To determine the speed of the selection sort algorithm, notice that the first time through the loop requires considering N different items (the whole loop). The second time requires checking N-1 items, the third time N-2 items, and so forth until the last time through the loop you only need to compare 2 then 1 items. These checks can then be summed into a series:

Selection sort’s speed is a quadratic function of the size of the list: that is, if you double the size of the list, the number of comparisons you need to do quadruples!.

Faster sorting algorithms (like Python’s Timsort) get this speed down to “fast” N*log2(N) (“loglinear”), which is much better than quadratic algorithms but still notably slower than linear algorithms.

For large lists, sorting a list is slower than just using a linear search on it… but once that list is sorted, you can use the ultra-fast binary search! This is a tradeoff that needs to be considered when trying to improve the efficiency of programs that work on large data sets: sometimes you need to spend some extra time up front to prepare (sort) the data, in order to be able to utilize (search) it more effectively.

Resources

- List Algorithms (Downey), Sections 14.1 - 14.7

- What is Algorithm Analysis? and Big-O Notation